Functional Requirements

- Users should be able to create a chat (with max 100 participants).

- Users should be able to send and receive messages (extended: from different devices).

- Users should be able to send and receive media files (image and video).

- Users should be able to receive messages sent when they’re offline.

Non-Functional Requirements

- Guaranteed Delivery: The system should guarantee message delivery to users.

- Low Latency Communication: Messages should be delivered within 500ms.

- Data Persistence: The system must be fault-tolerant, ensuring no message loss.

- Scalability: The system should be scalable to support 1 billion active users, each generating approximately 100 messages per day (total ~100 billion messages daily).

API Design

WebSocket only provides a communication channel and does not enforce any message structure. The application needs to implement its own protocol or message format on top of it. We’re going to use an “operation/type” field to model requests and responses.

On the server side, each operation is processed based on its type.
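
As an illustration of the “operation/type” idea, here is a minimal sketch of a message envelope and a type-based dispatcher. The envelope fields (`type`, `payload`), the `_response` suffix, and the handler names are assumptions made for this example, not a fixed protocol.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping an operation "type" to its handler.
HANDLERS: Dict[str, Callable[[Dict[str, Any]], Dict[str, Any]]] = {}


def operation(op_type: str):
    """Register a handler function for one operation type."""
    def register(fn):
        HANDLERS[op_type] = fn
        return fn
    return register


@operation("create_chat")
def handle_create_chat(payload: Dict[str, Any]) -> Dict[str, Any]:
    # A real server would write to the chat table; here we return a fake id.
    return {"chatId": "chat-1", "participants": payload["participants"]}


@operation("send_message")
def handle_send_message(payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": "accepted", "chatId": payload["chatId"]}


def dispatch(raw_frame: str) -> str:
    """Decode one WebSocket text frame and route it by its "type" field."""
    frame = json.loads(raw_frame)
    handler = HANDLERS.get(frame.get("type"))
    if handler is None:
        return json.dumps({"type": "error", "reason": "unknown operation"})
    result = handler(frame.get("payload", {}))
    return json.dumps({"type": frame["type"] + "_response", "payload": result})
```

A chat server would call `dispatch` for every incoming frame and write the returned string back on the same connection.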

High Level Design

We’re going to start with a basic design and progressively refine it to a more optimized solution that meets all non-functional requirements.

Users should be able to start a new chat

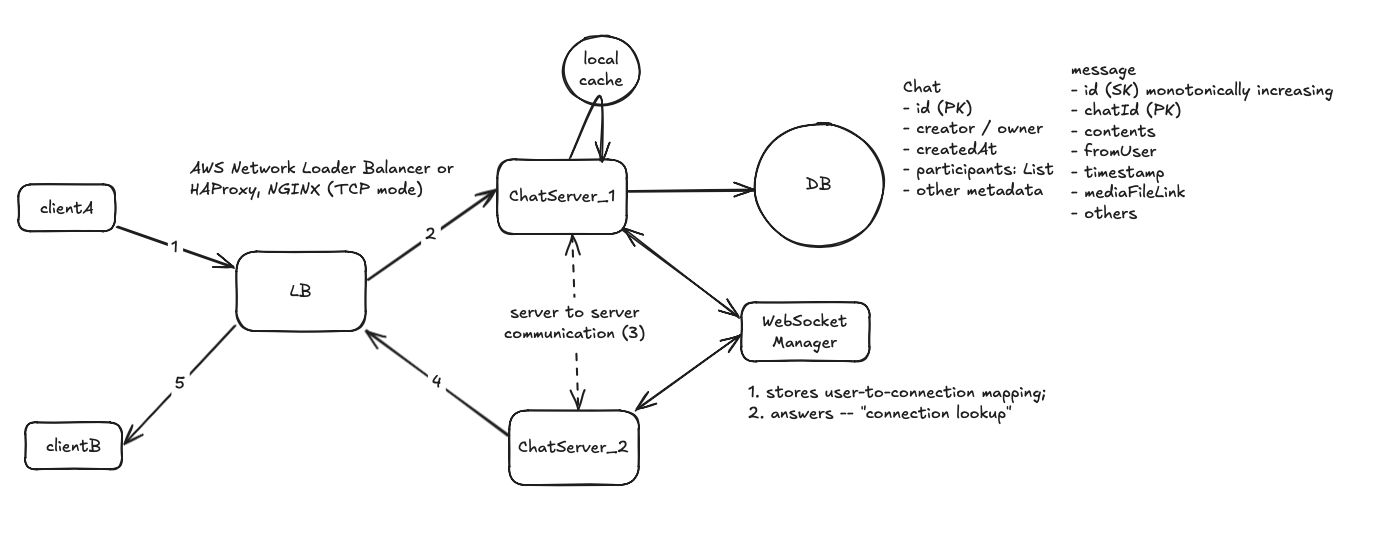

Considering the user scale (over 1B), we may need hundreds (or even thousands) of chat servers. So we need L4 load balancer(s) that pick the chat server for each new connection based on least connections. With that clarified, here is the workflow.

- The client opens the chat app, which establishes a connection to a chat server through the L4 load balancer. The client then sends a create_chat request over the WebSocket connection.

- The chat server receives the request, writes a new chat record to the DB (chat table), and returns the chatId to the client.
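
The least-connections choice made by the load balancer can be sketched as below. This is only an illustration: the function name and the dictionary of connection counts are hypothetical, and a real L4 load balancer tracks these counts internally.

```python
from typing import Dict


def pick_chat_server(connections: Dict[str, int]) -> str:
    """Return the server with the fewest open connections (ties broken by name)."""
    if not connections:
        raise ValueError("no chat servers available")
    # Iterating in sorted order makes the tie-break deterministic.
    return min(sorted(connections), key=lambda name: connections[name])


def assign_connection(connections: Dict[str, int]) -> str:
    """Pick a server for a new client and record the extra connection."""
    server = pick_chat_server(connections)
    connections[server] += 1
    return server
```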

Users should be able to send/receive messages

To send and receive messages between users, we need a WebSocket manager that keeps track of each user and the chat server that user is connected to, so that a chat server can query the WebSocket manager to find the target chat server for a message. Here is the workflow for sending a message:

- User_A sends a send_message request through the WebSocket connection. (Assuming user_A is connected to chat server 1).

- When chat server 1 receives the request:

- It first looks up all the participants for a given chat (by querying chat table).

- For each participant, it then checks its local cache for a user-connection record. If none is found, it queries the WebSocket manager to find out which chat server that participant is connected to and updates the local cache (so that it does not need to query again).

- Chat server 1 forwards the message to chat server 2 using internal server-to-server communication (for example, a direct TCP/HTTP connection).

- When chat server 2 receives the server-to-server message, it identifies that it has an active connection with user_B and pushes the message to user_B through the established WebSocket connection.

- Repeat the process until the chat server forwards the message to all participants.
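
The routing steps above can be sketched as follows. This is a simplified, in-memory illustration: `WebSocketManager`, `ChatServer`, and the `route` method are hypothetical names, the chat table is a plain dictionary, and cache invalidation (when a user reconnects to a different server) is left out.

```python
from typing import Dict, List, Optional, Tuple


class WebSocketManager:
    """Hypothetical registry of which chat server each user is connected to."""

    def __init__(self) -> None:
        self._user_to_server: Dict[str, str] = {}
        self.lookups = 0  # counts queries, to show the effect of the local cache

    def register(self, user_id: str, server_id: str) -> None:
        self._user_to_server[user_id] = server_id

    def lookup(self, user_id: str) -> Optional[str]:
        self.lookups += 1
        return self._user_to_server.get(user_id)


class ChatServer:
    def __init__(self, server_id: str, manager: WebSocketManager,
                 chats: Dict[str, List[str]]) -> None:
        self.server_id = server_id
        self.manager = manager
        self.chats = chats                      # chat_id -> participant ids
        self.route_cache: Dict[str, str] = {}   # user_id -> chat server id

    def route(self, sender: str, chat_id: str, text: str) -> List[Tuple[str, str, str]]:
        """Return (target_server, recipient, text) for every online recipient."""
        deliveries = []
        for user_id in self.chats[chat_id]:
            if user_id == sender:
                continue
            server = self.route_cache.get(user_id)
            if server is None:
                server = self.manager.lookup(user_id)
                if server is None:
                    continue  # no connection record: the user is not online
                self.route_cache[user_id] = server
            deliveries.append((server, user_id, text))
        return deliveries
```

Each returned tuple would then be sent over the server-to-server link to the target chat server.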

This design works but has a problem: it assumes all participants are online (have an active WebSocket connection), which is not guaranteed. We need a way to deliver messages to offline users, so that when they come back online they can retrieve all messages sent while they were offline.

Users should be able to receive messages sent when they’re offline

To ensure users can still retrieve messages sent while they were offline, we can persist those messages in the DB (in a separate “inbox” table). When those users come online, immediately after establishing a WebSocket connection, the client sends a first request to retrieve all unread messages, using the following workflow:

- The chat server queries the inbox table and looks up all message IDs for this user.

- The chat server simply pushes all those messages to the user through the already established WebSocket connection.

The design remains mostly the same with one addition: a new inbox table with two columns, (1) message_id and (2) participant_id. We can create an additional GSI with participant_id as the partition key (PK) and message_id as the sort key (SK). This ensures all message_id values belonging to the same user are stored in the same partition, enabling efficient lookup of all unread messages for a given user.

With this “offline user” support, here is the new workflow for sending messages:

- The user sends a send_message request to the chat server.

- The chat server first queries all participants that it needs to deliver this message to based on chat_id.

- Then the chat server looks up the target/destination chat server that each participant is connected to.

- If an active connection is found (meaning the user is online), it forwards the message to the target chat server through server-to-server communication.

- If no active connection is found for a given participant (meaning the user may be offline), it writes the message to the inbox table (message_id and participant_id).

- When those offline users come online, their clients look up all unread messages using the process discussed earlier.
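
The online/offline split in this workflow can be sketched as below. The `Inbox` class is an in-memory stand-in for the inbox table keyed by participant_id, and `deliver` is a hypothetical helper; the set of online users stands in for the connection lookup.

```python
from collections import defaultdict
from typing import Dict, List, Set


class Inbox:
    """In-memory stand-in for the inbox table, keyed by participant_id."""

    def __init__(self) -> None:
        self._rows: Dict[str, List[str]] = defaultdict(list)

    def put(self, participant_id: str, message_id: str) -> None:
        self._rows[participant_id].append(message_id)

    def unread(self, participant_id: str) -> List[str]:
        """Return all stored message ids for one participant, oldest first."""
        return list(self._rows.get(participant_id, []))


def deliver(message_id: str, recipients: List[str], online: Set[str],
            inbox: Inbox) -> List[str]:
    """Push to online recipients, store for the rest. Returns who got a live push."""
    pushed = []
    for user_id in recipients:
        if user_id in online:
            pushed.append(user_id)          # would be forwarded to their chat server
        else:
            inbox.put(user_id, message_id)  # picked up when the user reconnects
    return pushed
```

When a client reconnects, the chat server would call `unread` for that participant and push each message over the new connection.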

Recap

So far we have satisfied all functional requirements:

- support creating a new chat;

- support sending/receiving messages with optional media content;

- support receiving messages sent when users are offline.

But this design clearly has a few issues.

- It’s hard to scale to support over a billion active users, because a lot of inter-server communication is needed just to forward messages between users connected to different chat servers.

- There is no mechanism to ensure “guaranteed delivery”. The chat server simply pushes messages onto the WebSocket connection but does not know or track whether they were delivered. In case of a network outage, the recipient may never receive the message.

- Messages are persisted in the DB, but storage is going to be a concern (with a billion users and up to 100 messages per user per day). We need a way to offload unnecessary storage.

- If a user has multiple devices (desktop application, mobile, etc.), which device takes precedence for receiving messages?

- End-to-end encryption: user messages are highly private and confidential and should not be intercepted by any party in the middle. How can we enforce that?

We’ll address these challenges in detail in the Deep Dive section.

Deep Dive

The system should be scalable to support billions of active users.

This is the time to do a back-of-envelope estimation so that we can make the right technical choices for scaling.

- 1 billion daily active users;

- 100 messages sent per user per day on average;

- 60% of messages are 1-to-1 (one delivery per message);

- 40% of messages are group messages (20 recipients per message on average);

Estimations:

- How many messages will the system process and deliver per second?

- total # messages sent per second:

- 1B (users) * 100 (messages per user per day) / 10^5 (seconds per day, rounded up from 86,400) = 1 M

- total # messages delivered per second:

- (1-to-1 message) 60% of 1M = 0.6 M (delivery)

- (group message) 40% of 1M * 20 (recipients) = 8 M (delivery)

- Summary:

- The system processes approximately 1 M new messages per second.

- The system delivers approximately 8.6 M (round it to 10M) messages to recipients every second.

- How many chat servers do we need?
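
As a sanity check on the message-rate estimates above, the arithmetic can be reproduced in a few lines. This is a minimal sketch: the constant names are ours, and the day is rounded to 10^5 seconds as in the estimate.

```python
DAILY_ACTIVE_USERS = 1_000_000_000
MESSAGES_PER_USER_PER_DAY = 100
SECONDS_PER_DAY_ROUNDED = 10**5   # 86,400 rounded up for easy arithmetic
ONE_TO_ONE_PERCENT = 60           # one delivery per message
GROUP_PERCENT = 40
AVG_GROUP_RECIPIENTS = 20

# Messages entering the system every second.
sent_per_second = (DAILY_ACTIVE_USERS * MESSAGES_PER_USER_PER_DAY
                   // SECONDS_PER_DAY_ROUNDED)

# Deliveries leaving the system every second (fan-out for group messages).
one_to_one_deliveries = sent_per_second * ONE_TO_ONE_PERCENT // 100
group_deliveries = sent_per_second * GROUP_PERCENT // 100 * AVG_GROUP_RECIPIENTS
delivered_per_second = one_to_one_deliveries + group_deliveries

print(f"sent/s:      {sent_per_second:,}")
print(f"delivered/s: {delivered_per_second:,}")
```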

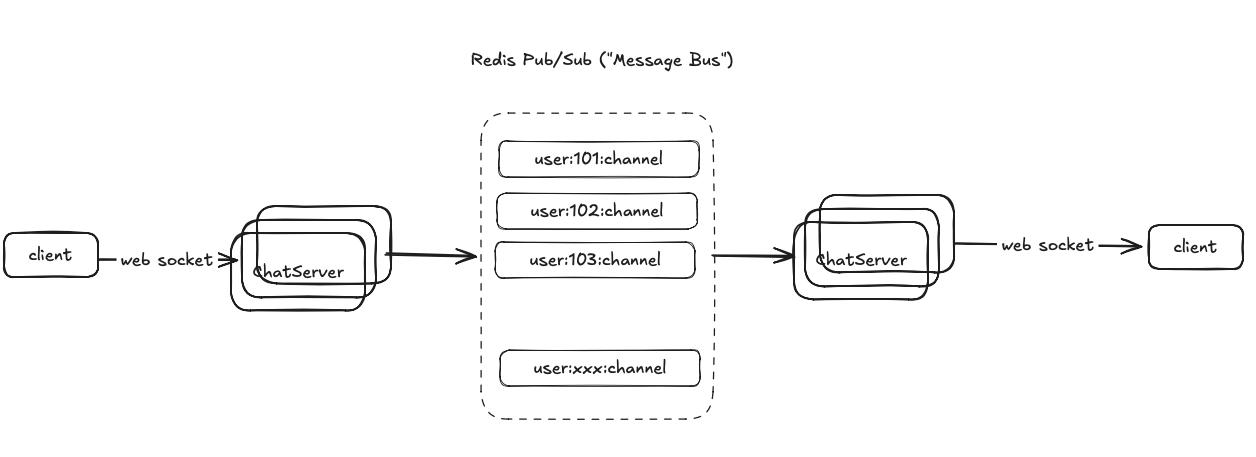

The solution is to use Redis Pub/Sub as an “Event/Message Bus”, which removes most direct server-to-server communication in large-scale chat systems.

Here's how it works:

- Channel Creation and Subscription

- When a user connects to a chat server, that server (the recipient’s server) subscribes to a channel named after the user’s ID (e.g. user:101:messages).

- The channel does not need to be created in Redis beforehand. It comes into existence when the first subscription is made and disappears when the user goes offline.

- Message workflow (sending messages)

- When user101 wants to send a message to user102, the sender’s chat server checks whether there is a Redis channel for user102.

- If yes, the sender’s chat server simply publishes the message to that channel. The recipient’s server (for user102) receives the message because it is subscribed, and then delivers it to user102 through the WebSocket connection.

- If not, the sender’s chat server writes the message to the “inbox” table (the process remains the same as discussed in “offline user support”).

In summary, each server only subscribes to channels for the users currently connected to it. Servers don’t need to know which other server a recipient is connected to; the Redis Pub/Sub system handles the routing automatically. If a user is offline, the message is stored in the DB (inbox) and delivered when they come back online. This design eliminates the large amount of inter-server communication and removes the need to maintain a “user-to-connected-server” mapping, which solves the routing problem.
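
To make the publish-or-store decision concrete, here is a minimal sketch that uses an in-memory stand-in for the bus instead of a real Redis client. `InMemoryBus`, `channel_for`, and `send` are hypothetical names. One deliberate variation from the description above: rather than checking for the channel first, the sketch relies on the publish call returning the number of subscribers that received the message, which is also what the Redis `PUBLISH` command returns.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class InMemoryBus:
    """Tiny stand-in for Redis Pub/Sub: a channel exists only while subscribed."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self._subs[channel].append(callback)

    def unsubscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self._subs[channel].remove(callback)
        if not self._subs[channel]:
            del self._subs[channel]  # last subscriber gone, channel disappears

    def publish(self, channel: str, message: str) -> int:
        """Deliver to current subscribers and return how many received it."""
        callbacks = list(self._subs.get(channel, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)


def channel_for(user_id: int) -> str:
    return f"user:{user_id}:messages"


def send(bus: InMemoryBus, inbox: Dict[int, List[str]],
         recipient_id: int, message: str) -> str:
    """Publish if some chat server is subscribed, otherwise store in the inbox."""
    if bus.publish(channel_for(recipient_id), message) == 0:
        inbox.setdefault(recipient_id, []).append(message)
        return "stored"
    return "published"
```

In this sketch the recipient’s chat server would register a callback that writes the message to the user’s WebSocket connection, and unsubscribe when the user disconnects.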

The system should ensure guaranteed delivery.

To ensure guaranteed delivery, we use ACKs to acknowledge message delivery. For each connected client, when the client receives a message, it sends an ackMessage back to the chat server through the same WebSocket connection. The chat server can then update the message status in the DB (and optionally delete the message from the table).
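
One way to track acknowledgements on the chat server is sketched below. Note that the retry-after-timeout step is our assumption (the text above only describes the ACK itself), and `DeliveryTracker` and the 5-second timeout are hypothetical.

```python
from typing import Dict, List, Tuple


class DeliveryTracker:
    """Remembers pushed messages until the client acknowledges them."""

    def __init__(self, ack_timeout_seconds: float = 5.0) -> None:
        self.ack_timeout = ack_timeout_seconds
        # (user_id, message_id) -> time the message was pushed
        self._pending: Dict[Tuple[str, str], float] = {}

    def mark_sent(self, user_id: str, message_id: str, now: float) -> None:
        self._pending[(user_id, message_id)] = now

    def ack(self, user_id: str, message_id: str) -> bool:
        """Handle an ackMessage. Returns True if it cleared a pending delivery."""
        return self._pending.pop((user_id, message_id), None) is not None

    def due_for_retry(self, now: float) -> List[Tuple[str, str]]:
        """Deliveries that have waited at least ack_timeout without an ACK."""
        return sorted(key for key, sent_at in self._pending.items()
                      if now - sent_at >= self.ack_timeout)
```

Passing `now` explicitly keeps the sketch deterministic; a server would pass `time.monotonic()`.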

The system should offload unnecessary storage.

In the first deep dive, we estimated ~10M message deliveries per second. Assuming the average size of one message is ~1 KB (the message contains the link to media and media metadata), the system would need 10M * 10^5 (seconds per day) * 1 KB ~= 1,000 TB ~= 1 PB of storage per day.

To offload unnecessary storage costs, we could consider the following two options:
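
The storage estimate above can be checked with the same kind of arithmetic. This sketch assumes decimal units (1 KB = 1,000 bytes) and uses the rounded figure of 10M deliveries per second from the first deep dive; the constant names are ours.

```python
DELIVERIES_PER_SECOND = 10_000_000   # rounded up from ~8.6M
SECONDS_PER_DAY_ROUNDED = 10**5      # 86,400 rounded up
AVG_MESSAGE_BYTES = 1_000            # ~1 KB per stored message (assumption: decimal KB)

bytes_per_day = DELIVERIES_PER_SECOND * SECONDS_PER_DAY_ROUNDED * AVG_MESSAGE_BYTES
terabytes_per_day = bytes_per_day // 10**12
petabytes_per_day = bytes_per_day // 10**15

print(f"{terabytes_per_day:,} TB/day (about {petabytes_per_day} PB/day)")
```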

- Option 1: (Product Decision) Should we still keep the message if it’s successfully delivered? Maybe we could just delete the message from DB once it’s delivered.

- Option 2: Set a TTL on messages (e.g. 30 days) and have the DB automatically remove messages that are older than 30 days.
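
Option 2 can be sketched as an expiry timestamp stored with each message. This is only an illustration of the idea: a database with built-in TTL support would do the purge itself, and `expires_at` and `purge_expired` are hypothetical helpers.

```python
from typing import Dict

TTL_SECONDS = 30 * 24 * 60 * 60  # 30 days


def expires_at(created_at: int, ttl_seconds: int = TTL_SECONDS) -> int:
    """Epoch second after which the message may be removed."""
    return created_at + ttl_seconds


def purge_expired(expiry_by_message: Dict[str, int], now: int) -> Dict[str, int]:
    """Keep only messages whose expiry time is still in the future."""
    return {message_id: expiry
            for message_id, expiry in expiry_by_message.items()
            if expiry > now}
```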

The system should support multiple devices for the same user.

To support multiple clients per user, we can add a new participantClient table in the DB with the following columns:

- participantId (PK)

- clientId (SK)

- clientType: (~user agent)

- status: online/offline (active or inactive)

- lastSeen (timestamp)

We can keep re-using the user-level Redis Pub/Sub channel. With multi-client support, every chat server that the user is connected to (through different clients) needs to subscribe to the same user channel, so that when a new message arrives, each of those chat servers can deliver it to its connected clients. Also note that supporting multiple devices could significantly increase the number of concurrent WebSocket connections. From a product perspective, we recommend two optimizations:

- Allow users to connect from multiple devices while designating a primary device for message delivery

- Implement a cap of 2-5 concurrent devices per user to prevent connection scaling issues
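
The device cap and the per-user fan-out can be sketched together as below. `DeviceRegistry` is an in-memory stand-in for the online rows of the participantClient table, and the default cap of 5 is an assumption taken from the upper end of the suggested range.

```python
from typing import Dict, Set

MAX_DEVICES_PER_USER = 5  # assumption: upper end of the suggested 2-5 range


class DeviceRegistry:
    """In-memory stand-in for the participantClient table (online clients only)."""

    def __init__(self, max_devices: int = MAX_DEVICES_PER_USER) -> None:
        self.max_devices = max_devices
        # participantId -> {clientId: chat server holding that connection}
        self._clients: Dict[str, Dict[str, str]] = {}

    def connect(self, participant_id: str, client_id: str, server_id: str) -> bool:
        """Register a client connection. Returns False if the cap is reached."""
        clients = self._clients.setdefault(participant_id, {})
        if client_id not in clients and len(clients) >= self.max_devices:
            return False
        clients[client_id] = server_id
        return True

    def disconnect(self, participant_id: str, client_id: str) -> None:
        self._clients.get(participant_id, {}).pop(client_id, None)

    def subscribed_servers(self, participant_id: str) -> Set[str]:
        """Chat servers that must subscribe to this user's Pub/Sub channel."""
        return set(self._clients.get(participant_id, {}).values())
```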